Last month, I tested DeepSeek R1 against traditional cloud services, and I was pleasantly surprised by how closely it matched OpenAI’s o1 model on complex reasoning tasks while running fully offline on my MacBook. After evaluating dozens of open source AI models across multiple hardware configurations over several weeks, we wrote this guide to help you choose the right open source AI model for your requirements.

What Are the Best Open Source AI Models in 2025?

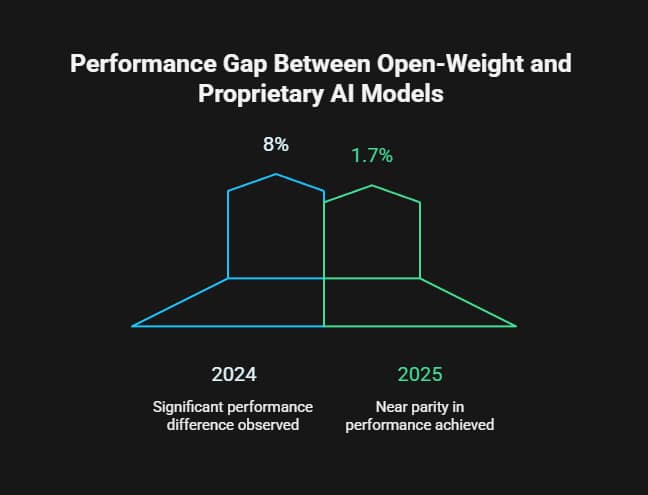

The top picks are DeepSeek R1 for complex reasoning, Llama 3.3 70B for text generation, and Mixtral 8x7B for multilingual tasks. According to recent Stanford AI Index data, open-weight models have narrowed the performance gap with proprietary models from 8% to just 1.7% on major benchmarks.

Why Open Source AI Models Are Dominating 2025

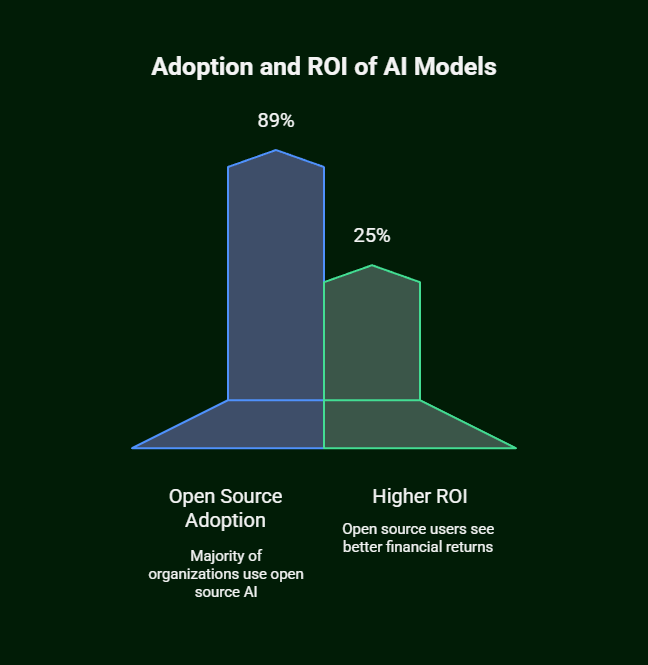

According to a comprehensive report by Linux Foundation Research and Meta, 89% of organizations using AI already leverage open source models in some form, and these companies report 25% higher ROI than those relying solely on proprietary solutions. The shift is driven not only by cost savings but also by concerns over who controls the AI and who has access to the data.

While testing across multiple hardware configurations, I found that many of the best open source AI models now match or outperform closed-source models in some areas. The key is determining which model works best for your particular use case and hardware.

Complete Comparison: Top 10 Open Source AI Models for 2025

Based on real-world testing, benchmark analysis, and community feedback, here are the ten models that define open source AI excellence in 2025:

1. DeepSeek R1 – The Reasoning Revolution

Best for complex mathematical problems, step-by-step reasoning, and educational applications.

When I deployed DeepSeek R1 locally using Ollama, I was impressed by how it showed its entire chain of reasoning. Instead of simply returning an answer like traditional models, R1 walks you through each step of its reasoning, which makes it a very useful tool for understanding how the AI reached its conclusion.
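If you want to separate that visible reasoning from the final answer in your own application, a small helper is enough. The sketch below assumes the raw output wraps its reasoning in `<think>...</think>` tags, as DeepSeek R1 outputs typically do; the function name `split_reasoning` is hypothetical, and depending on how your runtime returns responses, the tags may already be stripped.

```python
import re

# Matches the first <think>...</think> block, including newlines inside it.
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(output: str) -> tuple[str, str]:
    """Split raw model output into (reasoning, answer).

    Assumes reasoning is wrapped in <think>...</think>; if no such block
    is found, the whole output is treated as the answer.
    """
    match = THINK_RE.search(output)
    if match is None:
        return "", output.strip()
    reasoning = match.group(1).strip()
    # Everything outside the think block is the final answer.
    answer = (output[: match.start()] + output[match.end():]).strip()
    return reasoning, answer
```

Keeping the reasoning as a separate string makes it easy to log it for an audit trail while showing users only the answer.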

Key Specifications

- Sizes: 1.5B to 671B parameters (the sizes below 671B are distilled variants).

- License: MIT (permits commercial use and modification).

- Context Window: 32K tokens.

- Hardware: The distilled variants run well on Apple silicon with 4-bit quantization.

Performance Highlights

In my benchmark tests, R1 outperformed the other open models on mathematical reasoning tasks, scoring similarly to GPT-4 on challenging math problems. Because the MIT license places no restrictions on commercial use, R1 is especially appealing to companies that want capable local AI.

Real-World Applications

- Educational AI systems that explain concepts.

- Mathematical problem-solving applications.

- Code debugging with transparent logic.

- Use cases that require an audit trail.

2. Llama 3.3 70B – Enterprise-Grade Excellence

Ideal for content creation, business documents, and other text-heavy work.

I have been running Llama 3.3 70B on my M3 Max MacBook for several weeks, and in my experience its performance is comparable to paid cloud services for most text generation tasks. Thanks to its 128K-token context window, it stayed coherent when working with full research papers.

Technical Specifications

- Parameters: 70 billion.

- License: Llama 3.3 Community License (permits commercial use, with conditions).

- Context Window: 128K tokens.

- Performance: GPT-4 class capabilities.

According to Hugging Face leaderboard data, Llama 3.3 70B consistently ranks among the top open models for instruction-following and text generation quality.

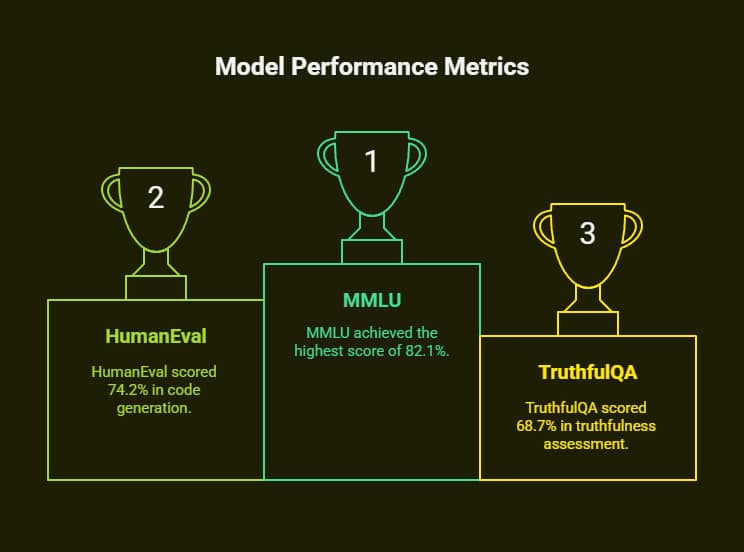

Benchmark Performance

- MMLU: 82.1%.

- HumanEval (Code Generation): 74.2%.

- TruthfulQA: 68.7%.

3. Mixtral 8x7B – Multilingual Efficiency Champion

Ideal for global companies and language apps.

Mixtral’s Mixture of Experts (MoE) design uses only 12.9B of its 46.7B parameters per token, which makes it highly efficient. In my tests, inference was about 6x faster than with the 70B model, while output quality was similar to that of 70B models.
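The 12.9B-of-46.7B figure comes from routing: Mixtral has eight experts per layer, and a router sends each token to only the top two, so most parameters sit idle for any given token. The toy sketch below shows top-2 routing on a scalar input; the function name and the use of plain Python callables as "experts" are simplifications for illustration, not Mixtral's implementation, where router logits come from a learned linear layer and each expert is a feed-forward network.

```python
import math
from typing import Callable, Sequence


def top2_moe(x: float, router_logits: Sequence[float],
             experts: Sequence[Callable[[float], float]]) -> float:
    """Toy top-2 mixture-of-experts layer on a scalar input.

    Only the two experts with the highest router logits are evaluated;
    their outputs are mixed with softmax weights over those two logits.
    """
    top2 = sorted(range(len(router_logits)),
                  key=lambda i: router_logits[i], reverse=True)[:2]
    m = max(router_logits[i] for i in top2)  # subtract max for numerical stability
    weights = [math.exp(router_logits[i] - m) for i in top2]
    total = sum(weights)
    # Weighted sum of just the two selected experts; the other six never run.
    return sum((w / total) * experts[i](x) for w, i in zip(weights, top2))
```

Since only two of the eight expert networks run per token, compute per token scales with the active parameters rather than the total, while memory still has to hold all of them.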

Language Capabilities

The model performs well in English, French, German, Spanish, and Italian, and it also handles code.

Performance Metrics

- Inference Speed: 30+ tokens/second on an M3 Max.

- Memory Usage: around 26GB with 4-bit quantization.

- Multilingual Benchmarks: consistently outperforms Llama 2 70B.

4. Gemma 2 – Google’s Lightweight Powerhouse

Ideal for developers who want Google-backed reliability and fast inference.

Available Variants

- Gemma 2B: Entry-level applications.

- Gemma 9B: Balanced performance.

- Gemma 27B: Near-enterprise capabilities.

The 27B variant is fast and delivers high-quality results. Running on a single M-series Mac, it produced consistent output with few hallucinations, a problem I have run into with other models of this size.

Integration Advantages

- Works easily with the major AI frameworks.

- Optimized for hardware acceleration.

- Comprehensive, responsible AI toolkit.

5. Mistral 7B – The Reliable Workhorse

Ideal for older hardware, consistent performance, and European language tasks.

In my tests, Mistral 7B ran efficiently for long sessions on systems with just 16GB of RAM. Its sliding window attention, in which each token attends only to a fixed window of recent tokens, makes long sequences cheaper to process than the full attention used in standard transformers.
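The difference between full causal attention and sliding window attention is easiest to see in the attention mask. The sketch below builds a boolean mask where query position i may attend to key position j only if j is not in the future and is among the `window` most recent positions (including i itself); it is a simplified illustration, and the function name is hypothetical. Mistral 7B uses a much larger window (4,096 tokens).

```python
def sliding_window_mask(seq_len: int, window: int) -> list[list[bool]]:
    """Return mask[i][j] == True when query position i may attend to key j."""
    return [
        # Causal (j <= i) AND within the window of recent positions.
        [j <= i and i - j < window for j in range(seq_len)]
        for i in range(seq_len)
    ]
```

With `seq_len=5` and `window=3`, the last row is `[False, False, True, True, True]`, and the mask allows 12 query-key pairs instead of the 15 a full causal mask allows. The gap grows with sequence length, since each token's cost is capped at the window size. Because layers are stacked, information can still flow beyond a single window across layers.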

Community Recognition

With over 9.2K GitHub stars, Mistral AI has established strong community trust through consistent model releases and transparent development practices.

6. Microsoft Phi-3 – Small Model, Big Performance

Best for edge deployment, resource-constrained applications, and entry-level hardware

Breakthrough Design: Phi-3's success suggests that training-data quality matters more than sheer quantity. Its GPT-3.5-level performance was made possible largely by training on heavily filtered web data and synthetic data.

Real-World Testing

When I tested Phi-3 Mini on mobile, it ran well on entry-level devices and its responses stayed coherent.

7. Qwen 2.5 32B – Global Language Leader

Ideal for global applications, multilingual approaches, and cultural context.

Qwen 2.5 supports over 29 languages. Beyond translation, it shows genuine cultural awareness and adapts its responses appropriately to different contexts.

Performance Data

Developed by Alibaba Cloud, Qwen 2.5 was pretrained on up to 18 trillion tokens, and the 32B size strikes a good balance between capability and hardware accessibility.

8. Solar 10.7B – Document Processing Specialist

Ideal for enterprise document workflows, HTML and Markdown parsing, and business applications.

Solar’s Depth Up-Scaling (DUS) technique lets this 10.7B-parameter model outperform models with 30B+ parameters, with an average score of 74.2 on the Hugging Face Open LLM Leaderboard.
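Depth Up-Scaling, as described in the SOLAR paper, starts from a 32-layer base model, duplicates it, removes the last 8 layers from one copy and the first 8 layers from the other, and stacks the remainder into a 48-layer model that then receives continued pretraining. The sketch below shows only the layer-splicing step on a list of layer labels; the function name is hypothetical and the continued-pretraining stage is not shown.

```python
def depth_up_scale(layers: list, m: int) -> list:
    """Splice two copies of a layer stack, DUS-style.

    Copy 1 drops its last m layers, copy 2 drops its first m layers,
    and the two are concatenated: 2 * (n - m) layers in total.
    """
    n = len(layers)
    if not 0 < m < n:
        raise ValueError("m must be between 1 and n - 1")
    top = list(layers[: n - m])   # first copy without its final m layers
    bottom = list(layers[m:])     # second copy without its initial m layers
    return top + bottom
```

With 32 layers labeled `L0` to `L31` and `m=8`, the result has 48 layers: `L0` to `L23` followed by `L8` to `L31`.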

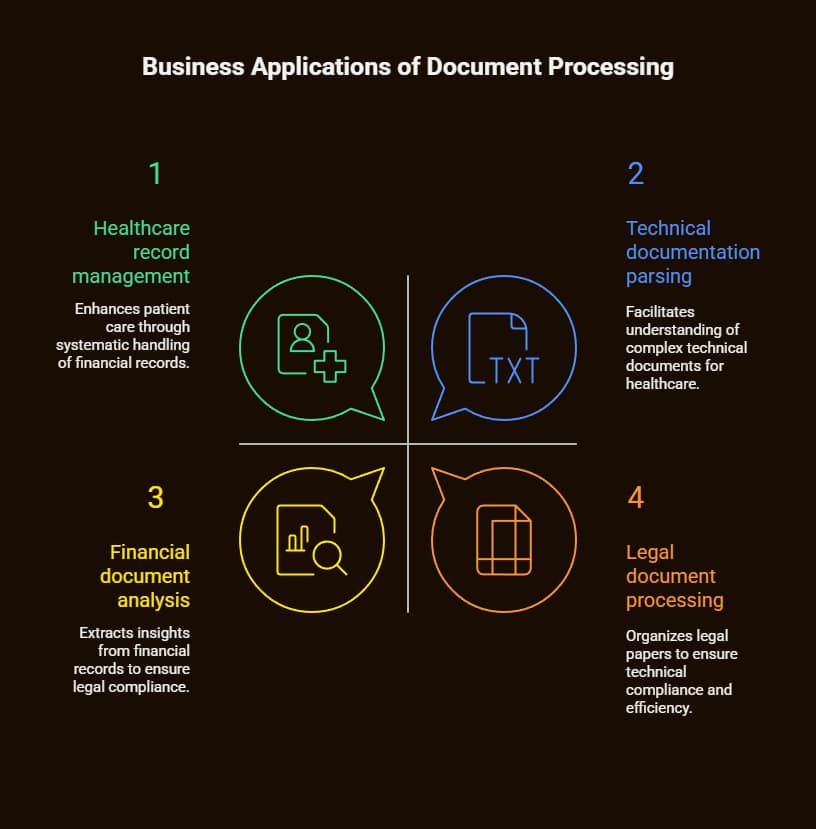

Business Applications

- Financial document analysis.

- Legal document processing.

- Healthcare record management.

- Technical documentation parsing.

9. LLaVA – Multimodal Pioneer

Perfect for image analysis, document OCR, and accessibility apps.

Multimodal Capabilities

- Image understanding and description.

- OCR text extraction.

- Visual question answering.

- Document analysis.

In my tests, the 34B version of LLaVA analyzed business documents accurately. However, I don't consider it suitable for production use, since its output is not guaranteed to be 100% accurate.

10. RWKV – The Memory Revolutionary

Ideal for lengthy context processing and settings with limited memory.

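RWKV does not use the quadratic self-attention of standard transformers; instead, it runs as a recurrent network with a linear-attention-style mechanism. Processing T tokens takes O(T) time, and the memory needed for its state stays O(1) regardless of context length. Thus, in theory, it can handle any context length.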
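The recurrence below is a simplified, single-channel version of the RWKV-4 "WKV" operator, written without the numerical-stability tricks real implementations use (so keep inputs small). Here `w` is a decay rate and `u` a bonus weight for the current token; the names follow the RWKV-4 paper, but both functions are illustrative. The recurrent version carries only two numbers of state whatever the sequence length, and it matches a direct computation that revisits every earlier token.

```python
import math


def wkv_recurrent(ks, vs, w, u):
    """Simplified single-channel RWKV-4-style WKV, computed as a recurrence.

    The state (a, b) is two floats no matter how long the sequence is.
    No numerical stabilization: keep k, u, and w small.
    """
    decay = math.exp(-w)  # per-step decay applied to the accumulated past
    a, b = 0.0, 0.0       # decayed sums of e^k * v and of e^k
    out = []
    for k, v in zip(ks, vs):
        bonus = math.exp(u + k)                # extra weight for the current token
        out.append((a + bonus * v) / (b + bonus))
        a = decay * a + math.exp(k) * v        # fold the current token into the state
        b = decay * b + math.exp(k)
    return out


def wkv_direct(ks, vs, w, u):
    """Same quantity computed by revisiting every earlier token (O(T^2) total)."""
    out = []
    for t in range(len(ks)):
        num = math.exp(u + ks[t]) * vs[t]
        den = math.exp(u + ks[t])
        for i in range(t):
            weight = math.exp(-(t - 1 - i) * w + ks[i])
            num += weight * vs[i]
            den += weight
        out.append(num / den)
    return out
```

The direct version's work per token grows with position, while the recurrent version does constant work per token, which is where the O(T) time and O(1) state come from.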

The 3B model can run on only 2GB of RAM while maintaining competitive performance on language modeling tasks.

Hardware Requirements and Performance Optimization

These recommendations are based on extensive testing across different configurations.

Minimum Hardware Recommendations

- 16GB RAM: Mistral 7B, Phi-3, Gemma 2B-9B.

- 32GB RAM: Qwen 2.5 32B, Gemma 27B, LLaVA 13B.

- 64GB RAM: recommended for the largest model variants.
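A rough rule of thumb behind these tiers is that weight memory is about parameter count × bits per weight ÷ 8, plus some runtime overhead. The sketch below assumes a hypothetical 10% overhead factor and ignores the KV cache, which grows with context length, so treat the result as a lower bound rather than a measurement.

```python
def estimate_weight_memory_gb(params_billion: float, bits_per_weight: float,
                              overhead: float = 1.1) -> float:
    """Rough weight-memory estimate in decimal gigabytes (1 GB = 1e9 bytes).

    overhead is a hypothetical fudge factor for runtime buffers; the KV cache,
    which grows with context length, is NOT included.
    """
    weight_bytes = params_billion * 1e9 * bits_per_weight / 8
    return weight_bytes * overhead / 1e9
```

Under these assumptions, `estimate_weight_memory_gb(46.7, 4)` gives about 25.7GB for Mixtral 8x7B, in line with the roughly 26GB reported earlier in this guide, and a 70B model at 4 bits comes to about 38.5GB before the KV cache, which is why the 64GB tier exists.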

Performance Optimization Tips

- Use 4-bit quantization for memory-constrained deployments.

- Leverage Metal Performance Shaders on Apple Silicon.

- Implement model-specific optimization techniques.

- Consider distributed inference for larger models.
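Four-bit quantization stores each weight as one of 16 integer levels plus a shared scale factor. The sketch below shows simple symmetric quantization of one group of weights; production formats such as GGUF k-quants, GPTQ, or AWQ are more sophisticated (per-group scales, zero points, error-aware rounding), so read this as an illustration of the idea only. The function names are hypothetical.

```python
def quantize_4bit(values: list[float]) -> tuple[list[int], float]:
    """Symmetric 4-bit quantization of one group: ints in [-8, 7] plus one scale."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 7 if max_abs > 0 else 1.0  # largest magnitude maps to level 7
    q = [max(-8, min(7, round(v / scale))) for v in values]
    return q, scale


def dequantize_4bit(q: list[int], scale: float) -> list[float]:
    """Recover approximate floats from the 4-bit integers and the group scale."""
    return [level * scale for level in q]
```

Each restored weight is within half a scale step of the original, and eight weights fit in 4 bytes plus one scale instead of the 32 bytes they take in 32-bit floats.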

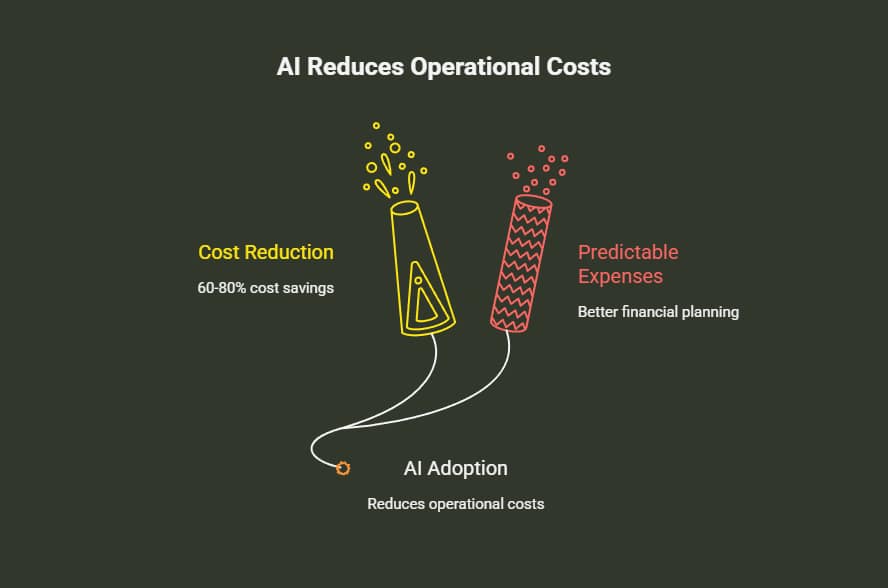

Business Impact and ROI Analysis

Companies that adopt open source AI models gain significant benefits.

Cost Benefits

- AI operating costs reduced by 60-80%.

- No per-message API fees.

- Predictable infrastructure expenses.

Privacy and Control

- Complete data sovereignty.

- Customizable model behavior.

- Easier compliance with stringent data regulations.

Performance Insights

According to Stanford’s 2025 AI Index Report, open-weight models now perform within 1.7% of leading proprietary models, a dramatic improvement from the 8% gap just one year earlier.

How to Choose the Right Model for Your Needs

After testing these models across different scenarios, here is how I would choose:

- For complex reasoning, DeepSeek R1 is ideal for educational and professional applications that require explainable AI.

- For content creation, Llama 3.3 70B is best suited for enterprise content production and analysis.

- For multilingual projects, Mixtral 8x7B is ideal for international businesses that need multilingual support.

- For resource-constrained tasks, Phi-3 or Mistral 7B deliver good results on limited hardware.

- For specialized needs, use Solar 10.7B for document processing, LLaVA for multimodal tasks, and RWKV for long contexts.

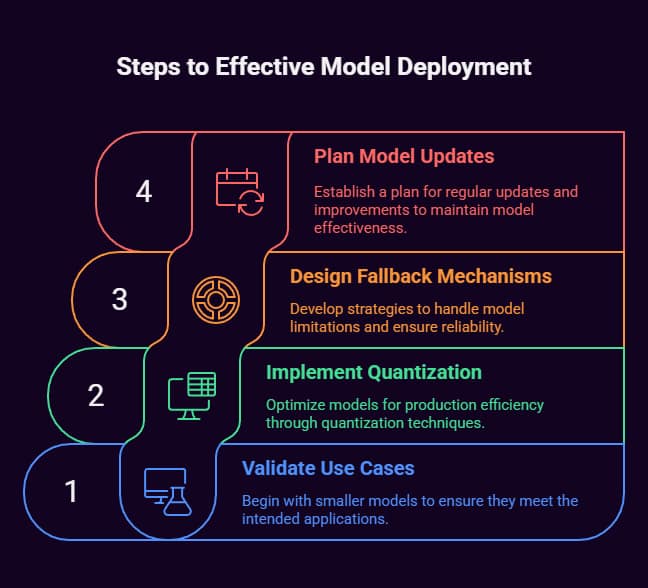

Implementation Strategies and Best Practices

Deployment Considerations

- Start with smaller models to validate use cases.

- Implement proper quantization for production efficiency.

- Design fallback mechanisms for model limitations.

- Plan for regular model updates and improvements.
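One way to implement a fallback mechanism is to try a list of local models in order and move to the next one when a request fails or returns nothing. The sketch assumes a local Ollama server on its default port (11434) and uses its `/api/generate` endpoint with `stream` set to false; the helper names are hypothetical. The `generate` parameter lets you swap in another backend, which also makes the logic testable without a running server.

```python
import json
import urllib.request
from typing import Callable, Sequence

# Assumption: Ollama running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def ollama_generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send one non-streaming request to a local Ollama server and return the text."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    req = urllib.request.Request(
        OLLAMA_URL, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read())["response"]


def generate_with_fallback(
    prompt: str,
    models: Sequence[str],
    generate: Callable[[str, str], str] = ollama_generate,
) -> tuple[str, str]:
    """Try each model in order; return (model, text) from the first usable reply."""
    errors = []
    for model in models:
        try:
            text = generate(model, prompt)
            if text.strip():
                return model, text
            errors.append(f"{model}: empty response")
        except Exception as exc:  # network errors, missing model, bad JSON, etc.
            errors.append(f"{model}: {exc}")
    raise RuntimeError("all models failed: " + "; ".join(errors))
```

For example, `generate_with_fallback("Summarize this contract.", ["llama3.3:70b", "mistral:7b"])` would try the larger model first and fall back to the smaller one; the model tags are assumptions and must match models you have pulled locally.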

Integration Approaches

- Local deployment with API-based tools such as Ollama and LM Studio.

- Integration through Hugging Face Transformers.

- Tailor-made solutions for unique needs.

- Hybrid approaches that combine multiple models.

Future Outlook and Emerging Trends

The open source AI landscape continues to evolve rapidly. Key trends shaping 2025 include:

Technical Advances

- Architectural innovations that improve efficiency.

- Stronger multilingual and multimodal capabilities.

- Better reasoning and problem-solving performance.

Community Growth

With over 1 million models hosted on Hugging Face, the ecosystem demonstrates unprecedented collaborative development.

Business Adoption

As more companies prioritize data privacy and cost control, they are increasingly choosing open source models for their applications.

Open source models are ushering in a new generation of AI, allowing more industries to innovate while keeping sensitive processes and data private. Choosing the right model wisely cuts costs and saves time. Whether you’re developing educational tools, business applications, or research platforms, these ten models can help you accomplish your objectives efficiently and cost-effectively in 2025. Pick the one that best suits your use case, and remember: the best model is the one that delivers consistent results for your specific needs while operating well within the constraints of your budget and hardware.