When I first tested the voice synthesis capabilities in Character AI’s dev environment, the difference between static text replies and dynamic voice was immediately clear. The platform had matured from text-based chat systems into voice-enabled, contextual companions that could keep up across thousands of replies. What began as a novelty sparked by Peppa Pig’s voice responses now presents a $27.29 billion opportunity for developers and enterprises seeking more genuine engagement.

The combination of character-driven AI and voice technology is about more than the technology itself. It changes how we think about the relationship between humans and computers. From my experience building custom chatbots for enterprise clients, I’ve observed that voice-enabled character AI systems consistently achieve 30-40% higher engagement rates than traditional text-only interfaces. This shift involves more than convenience. It involves creating authentic and appealing digital characters that users want to revisit.

How do you create your own custom chatbot with Character AI and voice?

You can create custom voice-enabled character AI chatbots through Character AI, Voiceflow, or a custom development framework. The Character AI platform already hosts more than 18 million unique chatbots, and its characters can also speak. This is part of a voice chatbot market projected to reach $15.5 billion by 2030. The process has five stages: select the platform on which your character will be developed, define the character’s personality, train the character on relevant data, integrate voice synthesis technology, and deploy on your preferred channel.

Understanding Character AI’s Revolutionary Impact

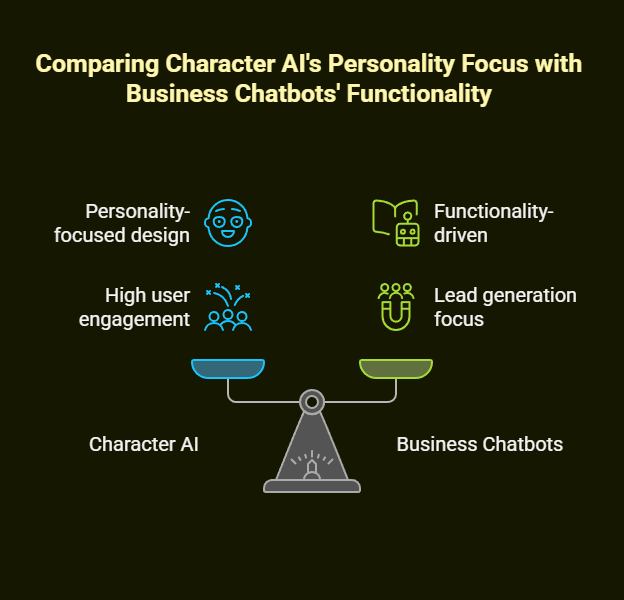

Character AI has changed how we view chatbots by focusing on personality over function. Character AI is not a business chatbot that generates leads or handles customer service. Rather, it is intended to create believable virtual versions of characters that a user enjoys chatting with. This approach has proven remarkably successful; the platform serves over 28 million monthly active users who spend an average of 75 minutes daily engaging with AI characters.

The Psychology Behind Character-Driven Interactions

Character AI did not become popular by accident. It aligns with core human traits. In user engagement data across different AI features, character-based systems show longer sessions and more return visits than task-oriented bots. By design, these characters encourage users to form a parasocial relationship with them.

When combined with voice technology, this emotional connection becomes more powerful. Voice makes characters sound more real, adding a dimension to the interaction that text cannot. The way we speak matters: tone, pace, and vocal personality traits create a more immersive experience that feels conversational rather than transactional.

Market Dynamics And User Preferences

The character AI market has experienced explosive growth, with Character AI’s revenue growing from approximately $15.2 million in 2023 to $32.2 million in 2024. This growth reflects increasingly demanding users. People want personalized and entertaining experiences rather than something merely functional, which is all most websites offer.

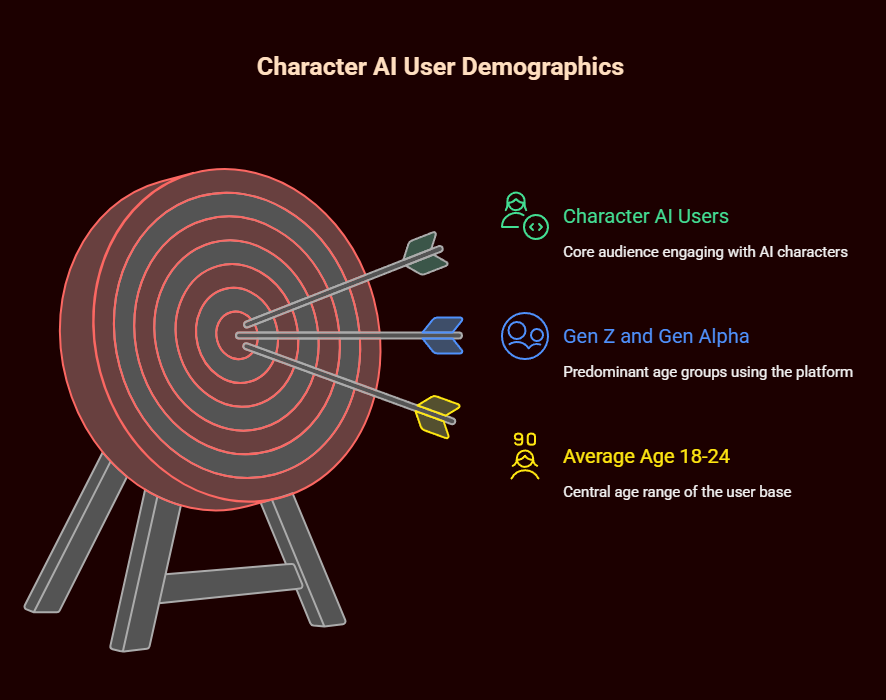

The demographic data reveals fascinating insights: over 50% of Character AI users are Gen Z or Gen Alpha, with an average age between 18 and 24. These users grew up alongside AI, so they expect smart tools with personality.

Voice Technology Integration: From Text to Speech

The change from text-based chatbots to voice AI is a great leap in interaction quality. Voice chatbots are now able to understand multimodal input, make sense of context thanks to the voice cues of users, and respond with the right tone of voice. The voice-enabled chatbot market is projected to reach $15.5 billion by 2030, driven by advances in speech recognition and synthesis technologies.

Technical Architecture Of Voice-Enabled Character AI

To build a good voice character AI, you have to know the technical stack as well. At the core, you need:

Speech Recognition Engine

Converts user voice input to text. Modern systems like OpenAI’s Whisper or Google’s Speech-to-Text show “human-level” accuracy in many languages and accents.

Natural Language Processing

Interprets the intent and context of the recognized text. Advanced NLP models preserve character and context over long conversations.

Character AI Model

An engine that generates suitable responses based on the assigned character’s traits, background, and conversational history.

Text-To-Speech Synthesis

Converts the AI’s text responses back to voice output. Premium TTS systems can maintain consistent vocal qualities that reinforce a character’s personality.

Voice Cloning And Customization

More advanced implementations use voice cloning technology to reproduce each character’s distinct vocal style, amplifying the experience even further.
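The components above can be chained into a single turn handler. The following is a minimal sketch, not a production design: `VoiceCharacterPipeline` and the three `fake_*` functions are hypothetical names, and the stubs stand in for real speech recognition, language model, and TTS services.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class VoiceCharacterPipeline:
    """Chains speech recognition -> character model -> speech synthesis."""
    transcribe: Callable[[bytes], str]                # speech recognition stage
    generate_reply: Callable[[str, List[str]], str]   # NLP + character model stage
    synthesize: Callable[[str], bytes]                # text-to-speech stage
    history: List[str] = field(default_factory=list)  # conversational history

    def handle_turn(self, audio_in: bytes) -> bytes:
        user_text = self.transcribe(audio_in)
        reply_text = self.generate_reply(user_text, self.history)
        # Record both sides of the turn so later replies can use the context.
        self.history.extend([f"user: {user_text}", f"character: {reply_text}"])
        return self.synthesize(reply_text)


# Stub stages (assumptions): replace each with a call to a real service.
def fake_transcribe(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_generate_reply(text: str, history: List[str]) -> str:
    return f"Ahoy! You said: {text}"


def fake_synthesize(text: str) -> bytes:
    return text.encode("utf-8")


pipeline = VoiceCharacterPipeline(fake_transcribe, fake_generate_reply, fake_synthesize)
audio_out = pipeline.handle_turn(b"hello there")
```

Keeping each stage behind a plain callable makes it easy to swap one provider for another without touching the turn logic.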

Real-World Implementation Challenges

Based on my experience building voice chatbots, these technical issues come up again and again:

Latency Management

Voice interactions demand real-time responses. When conversing, users expect flow. Hence, the total processing time, which covers speech recognition, the AI response (understanding what the user said and deciding what to say), and synthesis (speaking back), should stay under 1-2 seconds.
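One way to keep that target visible during development is to time each stage and compare the sum against a budget. This is a small sketch under stated assumptions: the 2.0 second budget is the upper end of the range above, and the stage names and sample timings are made up for illustration.

```python
import time
from typing import Callable, Dict, Tuple

LATENCY_BUDGET_SECONDS = 2.0  # assumption: upper end of the 1-2 second target


def timed(stage: Callable[[], object]) -> Tuple[object, float]:
    """Run one pipeline stage and return (result, elapsed seconds)."""
    start = time.perf_counter()
    result = stage()
    return result, time.perf_counter() - start


def check_budget(
    stage_seconds: Dict[str, float],
    budget: float = LATENCY_BUDGET_SECONDS,
) -> Tuple[float, bool, str]:
    """Return (total latency, whether it fits the budget, slowest stage name)."""
    total = sum(stage_seconds.values())
    slowest = max(stage_seconds, key=stage_seconds.get)
    return total, total <= budget, slowest


# Illustrative timings only, not measurements.
timings = {"speech_recognition": 0.35, "ai_response": 0.9, "synthesis": 0.4}
total, within_budget, slowest = check_budget(timings)
```

Reporting the slowest stage alongside the total points optimization work at the part of the pipeline that matters most.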

Context Preservation

Voice conversations flow differently from text chats. When people speak, they often interrupt themselves, trail off, and use filler words. The system must handle these natural speech patterns while preserving character consistency.

Emotional Intelligence

Voice carries emotional information that text lacks. Advanced character AI picks up on these vocal cues and modulates both the tone and the content of its response.

Platform Selection and Development Approaches

Whether you should go with an existing platform or invest in custom development depends on your technical needs, skill set, and budget.

Platform-Based Solutions

Character AI Platform

Creating a character is as easy as 1-2-3. Users can generate tailored characters, outline personality features, and deploy them without needing much technical knowledge. However, customization options are still limited, and voice integration requires additional tools.

Voiceflow

Provides sophisticated conversation design tools with voice integration. Its visual flow builder enables complex character logic and supports various deployment channels. It is a good fit for business applications that require a clear structure.

Chatbase

A business-focused platform with strong integration capabilities. Its bots can be configured with a personality and can converse with users based on a prompt and knowledge base, but they are not character AI, per se.

Custom Development Framework

Custom development offers maximum control and customization. The typical architecture involves:

Backend Services

Servers powered by Node.js or Python handling AI model interactions and user session management

AI Model Integration

APIs for language models (GPT-4, Claude, or a custom fine-tuned model) to generate responses from characters.

Voice Processing Pipeline

Integration with speech recognition services, TTS engines, and voice cloning services.

Frontend Applications

Web, mobile, or voice assistant interfaces through which users interact.

Database Systems

Storage for character names, dialogues, and user settings.

Building Your Custom Character AI System: Create Your Own Custom Chatbot with Character AI and Voice

It takes a well-designed personality, curated training data, and iterative refinement to make an effective character AI.

Character Development and Personality Engineering

A comprehensive definition of personality is essential for successful character AI. In my development process, I write detailed character profiles that cover the following.

Background And History

Character responses informed by backstory deepen conversations.

Personality Traits

Specific traits that shape communication style, interests, and behaviours.

Knowledge Domain

Areas of expertise and interests guide topics and response quality.

Communication Style

Word choice, sentence structure, style of humor, and emotional expression patterns.

Relationship Dynamics

The character’s role for the user (mentor, friend, expert, or entertainer) helps define tone.
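A profile like this can be captured as a small data structure and rendered into a system prompt for whichever language model you use. The sketch below is one possible shape, not a required format: `CharacterProfile`, its field names, and the sample character are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CharacterProfile:
    name: str
    background: str               # background and history
    traits: List[str]             # personality traits
    knowledge_domains: List[str]  # areas of expertise and interests
    communication_style: str      # word choice, humor, emotional expression
    relationship_role: str        # mentor, friend, expert, or entertainer

    def to_system_prompt(self) -> str:
        """Render the profile as plain-text instructions for a language model."""
        lines = [
            f"You are {self.name}. Stay in character at all times.",
            f"Background: {self.background}",
            f"Personality traits: {', '.join(self.traits)}",
            f"Areas of expertise: {', '.join(self.knowledge_domains)}",
            f"Communication style: {self.communication_style}",
            f"Your role for the user: {self.relationship_role}",
        ]
        return "\n".join(lines)


# Sample character, invented for illustration.
mira = CharacterProfile(
    name="Captain Mira",
    background="A retired deep-sea explorer who now teaches navigation.",
    traits=["curious", "patient", "dryly funny"],
    knowledge_domains=["oceanography", "navigation"],
    communication_style="Short sentences, nautical metaphors, gentle humor.",
    relationship_role="mentor",
)
prompt = mira.to_system_prompt()
```

Storing the profile as structured data rather than a hand-written prompt makes it easier to version, test, and reuse across channels.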

Training Data And Model Fine-Tuning

To make characters realistic, the AI requires substantial training. The process typically involves:

Dialogue Collection

Create conversations that exhibit the voice and personality traits you want the character to have.

Response Pattern Analysis

Identifying the character’s vocabulary, phrasing, and speech patterns.

Context-Aware Training

The model should be able to express the same idea appropriately in different contexts while staying in character.

Safety And Appropriateness Filtering

Keeping characters true to themselves while avoiding harmful output.

Voice Integration and Synthesis

To turn a text character into a voice-enabled personality, you need to carefully choose vocal features that emphasise personality traits.

Voice Selection

Pick base voices that match the character’s age, background, and personality. Modern TTS systems offer large voice libraries.

Prosody Customization

Adjusting speech rhythm, pauses, and intonation to match the character. Energetic characters speak quickly and vary their intonation a lot. Calm characters speak at a steady pace and use measured tones.

Emotional Expression

Configuring the system to adjust voice quality depending on the type of conversation and the character’s emotion.
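Many TTS engines accept SSML, the W3C markup for speech synthesis, whose `prosody` element controls rate and pitch. The sketch below maps a character temperament to prosody settings; the preset names and values are assumptions chosen for illustration, and support for individual SSML attributes varies by engine, so check your provider's documentation.

```python
from xml.sax.saxutils import escape

# Hypothetical presets: energetic characters speak faster and higher,
# calm characters slower and lower.
PROSODY_PRESETS = {
    "energetic": {"rate": "fast", "pitch": "high"},
    "calm": {"rate": "slow", "pitch": "low"},
}
DEFAULT_PROSODY = {"rate": "medium", "pitch": "medium"}


def to_ssml(text: str, temperament: str) -> str:
    """Wrap text in an SSML prosody element matching the temperament."""
    preset = PROSODY_PRESETS.get(temperament, DEFAULT_PROSODY)
    rate = preset["rate"]
    pitch = preset["pitch"]
    # escape() protects against &, < and > in the reply text.
    body = escape(text)
    return f'<speak><prosody rate="{rate}" pitch="{pitch}">{body}</prosody></speak>'


calm_ssml = to_ssml("Take a breath & relax.", "calm")
```

The same function could be extended with an emotion parameter so that one character sounds different when excited than when consoling a user.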

Real-World Applications And Use Cases

Voice-enabled character AI systems have many applications beyond entertainment.

Entertainment and Media

The world of entertainment is increasingly utilizing character AIs for interactive storytelling, virtual influencers, and media experiences. Interactive fiction platforms use character AI to enable users to speak directly with story characters through voice conversations.

Virtual influencers powered by character AI have consistent personalities throughout their social media while engaging with their fans authentically. This technology allows for 24/7 interaction while keeping the brand voice and character consistent.

Education and Training

Educational apps use character AI to create fun tutors, historical figures, and subject experts for engaging learning. Students can hold a voice conversation with AI versions of Shakespeare, Einstein, or any other historical figure.

Corporate training programs employ character AI mentors to assist employees with intricate tasks, ensuring uniform information transfer while tailoring explanations to each employee.

Customer Service and Support

Unlike conventional chatbots that concentrate solely on solving problems, characterful customer service AI creates engaging, memorable interactions. Companies report 27% higher customer satisfaction scores when using personality-driven AI assistants compared to generic chatbots.

The voice feature lets customers get service hands-free while they are multitasking.

Therapeutic and Wellness Applications

Character AI can help people who have anxiety or depression, or who feel lonely, by being supportive and always there for them. Its constant availability and personalized interaction style can complement conventional therapy approaches.

Because this software affects how people feel and think, ethics must be considered before building it so that users do not come to harm.

Technical Implementation Guide

Creating production-ready character AI systems means getting infrastructure, performance, and UX under control.

Architecture and Infrastructure

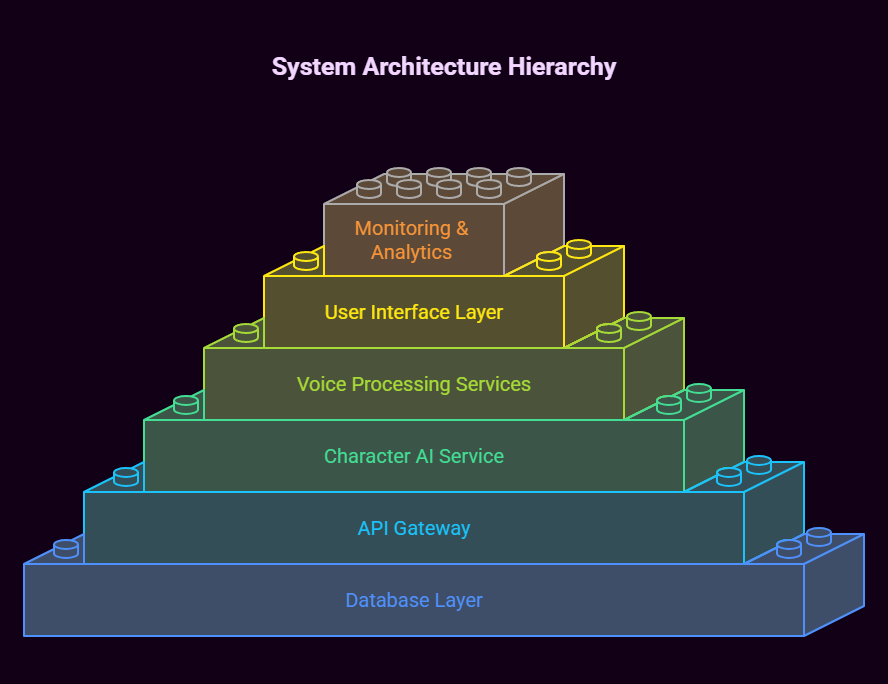

Modern character AI systems are usually designed using a microservices architecture.

User Interface Layer

User input and AI output are captured and rendered in web apps, mobile apps, or voice assistant interfaces.

API Gateway

Routes requests to the right services. It also handles authentication and rate limiting.

Character AI Service

A core service that manages user input, tracks character qualities, and generates responses.

Voice Processing Services

Separate services for speech recognition and text-to-speech.

Database Layer

Saves character definitions, conversation history, user preferences, and analytics.

Monitoring And Analytics

Tracks system performance, user engagement, and conversation quality metrics.
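As an example of one responsibility in this layout, the gateway's rate limiting can be sketched with a token bucket. The token bucket is a common technique chosen here for illustration, not something the architecture above prescribes; `TokenBucket` and its parameters are hypothetical, and time is passed in explicitly so the behaviour is easy to test.

```python
class TokenBucket:
    """Allows bursts up to `capacity` and refills at a steady rate."""

    def __init__(self, capacity: int, refill_per_second: float, now: float = 0.0) -> None:
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.tokens = float(capacity)  # start with a full bucket
        self.last = now

    def allow(self, now: float) -> bool:
        """Return True if a request arriving at time `now` may proceed."""
        elapsed = max(0.0, now - self.last)
        # Refill based on elapsed time, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# One bucket per user: a burst of 2 requests, then 1 request per second.
bucket = TokenBucket(capacity=2, refill_per_second=1.0)
decisions = [bucket.allow(0.0), bucket.allow(0.0), bucket.allow(0.0), bucket.allow(1.0)]
```

In a real deployment `now` would come from a monotonic clock such as `time.monotonic()`, and the bucket state would usually live in a shared store so every gateway instance sees the same counts.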

Performance Optimization

Voice interactions demand real-time performance. Key optimization strategies include:

Response Caching

Pre-generating common responses and character reactions to cut down processing time.

Streaming Synthesis

Starting to play the audio as soon as the text begins generating instead of waiting for the text to finish.

Model Optimization

Using models that are quantized or distilled for faster inference while maintaining the quality of the response.

Geographic Distribution

Deploy services near users to minimize network latency.
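The first two strategies can be sketched in a few lines. This is an illustrative sketch under assumptions: `ResponseCache` and `sentence_chunks` are hypothetical names, the cache is an in-memory dictionary, and the sentence splitter uses a simple punctuation rule that will not handle abbreviations.

```python
import re
from typing import Callable, Dict, Iterable, Iterator, Tuple


class ResponseCache:
    """Caches replies per character so repeated inputs skip generation."""

    def __init__(self) -> None:
        self._store: Dict[Tuple[str, str], str] = {}

    @staticmethod
    def _normalize(text: str) -> str:
        # Treat "Hello  THERE" and "hello there" as the same input.
        return " ".join(text.lower().split())

    def get_or_generate(
        self, character_id: str, user_text: str, generate: Callable[[str], str]
    ) -> str:
        key = (character_id, self._normalize(user_text))
        if key not in self._store:
            self._store[key] = generate(user_text)
        return self._store[key]


# Whitespace that follows sentence-ending punctuation marks a boundary.
_SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def sentence_chunks(token_stream: Iterable[str]) -> Iterator[str]:
    """Yield complete sentences as soon as they appear in a token stream,
    so synthesis can start before the full reply has been generated."""
    buffer = ""
    for token in token_stream:
        buffer += token
        parts = _SENTENCE_END.split(buffer)
        for sentence in parts[:-1]:  # everything but the last part is complete
            yield sentence
        buffer = parts[-1]
    if buffer.strip():
        yield buffer.strip()


tokens = ["Hello", " there.", " How", " are", " you?", " Fine"]
chunks = list(sentence_chunks(tokens))
```

Each yielded sentence can be handed to the TTS engine immediately, which is what lets audio playback begin while the model is still writing the rest of the reply.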

Quality Assurance and Testing

Character AI systems need methodical testing strategies:

Personality Consistency Testing

Automated tests verify that character responses stay consistent with the character’s traits across conversation types.

Voice Quality Evaluation

Testing the quality, clarity, and emotional expressiveness of each character’s synthesized speech.

User Experience Testing

Regular user sessions to uncover issues with conversation flow, inappropriate responses, and barriers to engagement.

Safety And Appropriateness Monitoring

Continuous monitoring of the character’s behaviour.
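A very simple form of personality consistency testing checks replies against phrases the character should and should not use. The sketch below is a rule-based stand-in with hypothetical names (`check_consistency`, `pirate_bot`); real test suites would likely add semantic checks or human review on top.

```python
from typing import Callable, Dict, List


def check_consistency(
    respond: Callable[[str], str],
    prompts: List[str],
    required_any: List[str],
    forbidden: List[str],
) -> Dict[str, List[str]]:
    """Return a mapping of prompt -> problems; empty when every reply passes."""
    failures: Dict[str, List[str]] = {}
    for prompt in prompts:
        reply = respond(prompt).lower()
        problems: List[str] = []
        # The reply should contain at least one marker of the character's voice.
        if not any(marker in reply for marker in required_any):
            problems.append("missing character marker")
        # The reply should never contain out-of-character phrases.
        problems.extend(f"forbidden phrase: {p}" for p in forbidden if p in reply)
        if problems:
            failures[prompt] = problems
    return failures


# Stub character used only to demonstrate the check.
def pirate_bot(prompt: str) -> str:
    return f"Arr, matey! About '{prompt}': the sea has the answer."


test_prompts = ["What is the weather?", "Tell me a joke.", "Help me with taxes."]
failures = check_consistency(
    pirate_bot,
    test_prompts,
    required_any=["arr", "matey"],
    forbidden=["as an ai language model"],
)
```

Running the same prompt set after every model or prompt change gives an early warning when a character starts drifting out of voice.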

Market Opportunities And Business Applications

Combining character AI with voice technology offers businesses new opportunities across different sectors.

Enterprise Applications

Progressive companies are using character AI for training, onboarding, and internal knowledge management. AI mentors help organizations deliver more consistent training to employees, adapting to their needs and learning styles.

Giving virtual assistants a distinct personality improves employee engagement with internal systems. Instead of dealing with generic interfaces, employees would interact with AI peers having personalities, areas of specialization, and communication styles.

Content Creation and Media

Character AI enables innovative interactive content that allows audiences to communicate and engage with fictional characters, celebrities, or brand personalities. Media companies create AI versions of popular characters to keep fans engaged while they wait for new content.

Brands use character AI versions of influencers and content creators to engage the audience when the humans are not available. This helps keep the brand voice and personality consistent.

Healthcare and Wellness

AI health coaches are one healthcare application: their friendly nature helps users keep up with medication schedules, exercise routines, and wellness goals.

Mental health apps feature supportive AI companions that are available 24/7, do not judge, and offer coping strategies without exploiting the relationship.

Educational Technology

Education technology companies are integrating character AI tutors who adjust their teaching style to suit individual students’ needs with engaging, encouraging personalities that inspire students to keep learning.

Language apps use AI characters that can talk like native speakers in real-world scenarios to hone your conversation skills.

Ethical Considerations and Responsible Development

Building character AI responsibly means addressing ethics, user safety, and design together.

Privacy and Data Protection

Voice-enabled character AI systems process sensitive personal information such as conversation content, voice characteristics, and user behavior. Responsible development requires:

Data Minimization

Collecting only the data needed to improve character function and user experience.

Consent and Transparency

Explaining to users how their data is used and letting them accept or refuse.

Security Measures

Encryption, secure storage, and access controls to protect user messages and personal information.

Retention Policies

Clear policies that tell users how their data is retained, deleted, and controlled.
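Retention and deletion rules are easier to honour when they exist as code rather than only as policy text. The following is a minimal in-memory sketch; `ConversationRecord`, `purge_expired`, `delete_user_data`, and the 30-day window are assumptions for illustration, and a real system would apply the same logic to its database.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List, Optional


@dataclass
class ConversationRecord:
    user_id: str
    text: str
    created_at: datetime


def purge_expired(
    records: List[ConversationRecord],
    retention_days: int,
    now: Optional[datetime] = None,
) -> List[ConversationRecord]:
    """Keep only records newer than the retention window."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=retention_days)
    return [r for r in records if r.created_at >= cutoff]


def delete_user_data(
    records: List[ConversationRecord], user_id: str
) -> List[ConversationRecord]:
    """Honour a user's deletion request by dropping all of their records."""
    return [r for r in records if r.user_id != user_id]


# Fixed timestamps so the example is reproducible.
now = datetime(2025, 1, 31, tzinfo=timezone.utc)
records = [
    ConversationRecord("alice", "recent chat", datetime(2025, 1, 30, tzinfo=timezone.utc)),
    ConversationRecord("alice", "old chat", datetime(2024, 12, 1, tzinfo=timezone.utc)),
    ConversationRecord("bob", "recent chat", datetime(2025, 1, 15, tzinfo=timezone.utc)),
]
kept = purge_expired(records, retention_days=30, now=now)
without_alice = delete_user_data(kept, "alice")
```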

Emotional Manipulation and Dependency

Character AI is engaging, but it raises concerns about emotional manipulation and unhealthy dependence. Responsible development includes:

Healthy Boundaries

Develop designs that allow for balanced use and do not encourage over-reliance on AI relationships.

Transparency Indicators

Clearly informing users that they are interacting with an AI and not a human.

Professional Limitations

Setting suitable limits on therapeutic or medical advice when supporting users’ feelings.

Usage Monitoring

Tools that enable users to monitor their usage patterns and keep their relationship with the AI healthy.

Content Safety and Appropriateness

Character AI systems must balance authentic personalities with safe content.

Content Filtering

Strong systems that limit harmful, inappropriate, or misleading information while preserving character and personality.

Age-Appropriate Design

Younger users require special considerations, including content limitations and parental control features.

Cultural Sensitivity

Understanding how different cultures communicate and use humor, and which topics are appropriate for a global audience.

Bias Mitigation

Testing and adjusting regularly so that character responses aren’t discriminatory.

Future Trends and Technological Developments

The character AI and voice technology space is changing quickly due to improved language models, better synthesis technology, and advances in user experience design.

Multimodal Integration

In the future, character AIs will be able to process and integrate various types of input, including speech, text, gestures, and even body language. This will make interactions with characters more true to life.

Soon, systems will be able to ascertain a user’s emotional state by analyzing tone of voice, facial expressions, and what is being said. Characters will then respond appropriately, making the interaction more empathetic.

Real-Time Personalization

Machine learning advances now allow a character to change in real-time based on the user’s personal preferences, previous conversations, and behavior. Every character can develop unique relationships with each user while remaining true to themselves.

Dynamic personality systems will let characters become different versions of themselves as time goes on, simulating real-life relationship evolutions and individual growth.

Integration with Physical Environments

More and more smart home devices, cars, and office spaces now feature character AI. With awareness of their surroundings, characters persist as companions across multiple physical and virtual settings while remembering their conversation history and relationship status.

Augmented and virtual reality platforms combine voice and visual interaction with character AI inside a photo-realistic space.

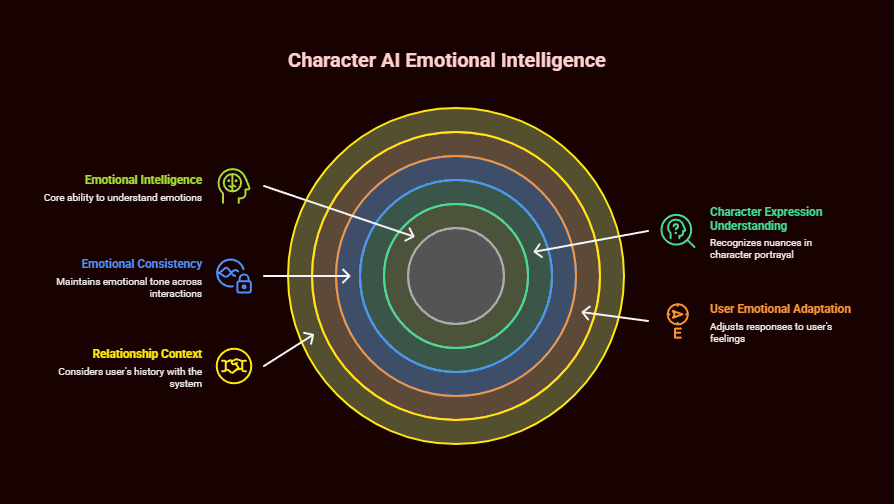

Advanced Emotional Intelligence

Next-generation character AI systems will exhibit strong emotional intelligence, understanding and responding to the subtleties of emotional expression.

These systems will keep the emotions consistent from one conversation to the next while adapting to the user’s emotional state and the user’s relationship with the system.

Implementation Roadmap and Best Practices

To implement a voice-enabled character AI successfully, you need a structured plan.

Phase 1: Foundation and Planning

Requirements Analysis

Clearly identify how you wish to use the character AI, who will be using it, and what the project’s success metrics are.

Character Design

Make a detailed character profile that summarizes their personality traits, background info, communication style, and expertise areas.

Platform Selection

Evaluate platforms and development options based on customization needs, technical requirements, and budget.

Technical Architecture

Create a system architecture that fulfills needs for scale, performance, and integration.

Phase 2: Development and Integration

Prototype Development

Develop a basic version of a character AI to try out core features and gather feedback.

Voice Integration

Implement the character’s voice, including speech generation and the underlying speech recognition.

Testing And Refinement

Test for personality consistency, chat quality, and technical performance.

Safety Implementation

Implement content filtering, safety measures, and monitoring to ensure responsible operation.

Phase 3: Deployment And Optimization

Controlled Launch

A limited release to a small percentage of users for evaluation.

Performance Monitoring

Monitor system performance, user engagement metrics, and conversation quality indicators.

Iterative Improvement

Release regular updates based on user feedback, usage, and performance.

Scale Preparation

Improve infrastructure and processes for a wider rollout based on findings.
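A controlled launch to a small percentage of users is often implemented by hashing the user ID into a stable bucket. The sketch below shows that idea; `in_rollout` and the salt value are hypothetical, and this is one common approach rather than the only way to stage a release.

```python
import hashlib


def in_rollout(user_id: str, percent: int, salt: str = "voice-character-v1") -> bool:
    """Return True if the user falls inside the first `percent` of 100 buckets.

    The same user always lands in the same bucket, so raising `percent`
    only ever adds users and never removes anyone already included.
    """
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 100  # stable value from 0 to 99
    return bucket < percent


users = [f"user-{i}" for i in range(1000)]
early_group = [u for u in users if in_rollout(u, 10)]
wider_group = [u for u in users if in_rollout(u, 50)]
```

Changing the salt reshuffles the buckets, which is useful when a later feature should be tested on a different slice of users.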

Phase 4: Growth and Enhancement

Feature Expansion

Based on user demand, implement new capabilities, conversation topics, and interaction modalities.

Personalization Enhancement

Integrate sophisticated personalization capabilities that adapt character behavior to individual users.

Platform Integration

Expand deployment across more channels and platforms to reach as many users as possible.

Community Building

Build user communities around characters so that users can share feedback and connect with one another.

Creating customized character AI with voice offers a great opportunity to build attractive and memorable digital experiences that users will enjoy. The technology has matured enough for real-world professional implementations, yet it is still accessible enough for a small project or experiment.

It’s vital to pay close attention to character, mechanics, and ethics. The implementations that feel the most natural are those that reflect the character’s real personality traits and have been designed to portray that personality safely.

As users continue to expect personalized, engaging interactions, the market opportunity continues to grow. Organizations that put character AI to successful use raise customer engagement and gain a competitive advantage.

Whether we are building entertainment apps, educational tools, or business solutions, character AI with voice lets us create compelling digital characters that users will love. Value and safety should come ahead of character, and all three can be achieved together.